I spent my day refactoring a Java application that was already performing well and doing its job. I usually enjoy moving code around myself, but I wanted to see if I could vibe-factor my way through the process by letting Claude handle the abstractions and move my beans and DAOs around to clean up the structure. This was a very tame refactoring, since it was purely a code restructure (I will write another time about my experience asking it to review and rate code; that was an interesting experiment in and of itself).

The code was not bad to begin with, but I wanted to play with making it better, and the results impressed me. I started by taking a few objects and making sure they were in the right place, and once that was finished I moved on to other classes that followed the same pattern. I used brave mode to let the agent run Linux commands, which sped up the process significantly. It involved a lot of grep’ing and ls’ing of files to find what was needed, and the agent ran Maven commands so it understood the full consequences of the work it was doing.

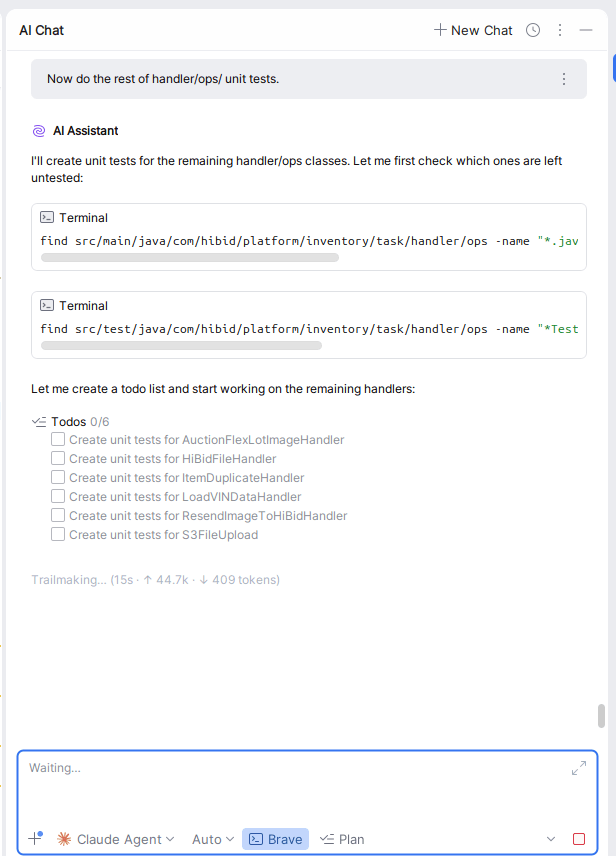

Once the refactoring was finished, I spent some time vibing Java unit tests with the agent. The experience was good overall, and it achieved solid code coverage with the kind of tests most developers cannot be bothered to write themselves, including the edge cases that get into all the “if” nooks and crannies.
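To give a flavour of what those “if” nooks and crannies look like, here is a minimal sketch. It is not code from my project: `ShippingCalculator`, its threshold, and its fee are hypothetical names and numbers made up for illustration, and the checks are written in plain Java rather than whatever test framework you happen to use.

```java
// Hypothetical class for illustration only; not from the refactored application.
final class ShippingCalculator {
    // Three paths to cover: invalid input, free-shipping threshold, default fee.
    static int feeInCents(int orderTotalInCents) {
        if (orderTotalInCents < 0) {
            throw new IllegalArgumentException("order total must not be negative");
        }
        if (orderTotalInCents >= 5_000) {
            return 0; // free shipping at or above the (assumed) threshold
        }
        return 499; // flat fee (assumed) for everything else
    }
}

// Plain-Java checks that exercise every branch, including the boundaries.
final class ShippingCalculatorChecks {
    static void check(boolean condition, String message) {
        if (!condition) {
            throw new AssertionError(message);
        }
    }

    static void runAll() {
        // Lowest valid input takes the default-fee branch.
        check(ShippingCalculator.feeInCents(0) == 499, "zero total pays the flat fee");
        // One cent below the threshold still pays.
        check(ShippingCalculator.feeInCents(4_999) == 499, "just under threshold pays the flat fee");
        // Exactly on the threshold flips to the free-shipping branch.
        check(ShippingCalculator.feeInCents(5_000) == 0, "threshold ships free");
        // Negative input must hit the guard clause.
        boolean rejected = false;
        try {
            ShippingCalculator.feeInCents(-1);
        } catch (IllegalArgumentException expected) {
            rejected = true;
        }
        check(rejected, "negative total is rejected");
    }
}
```

The boundary cases (4,999 vs. 5,000 and the negative input) are exactly the ones that tend to get skipped when writing tests by hand.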

I will note the process felt slow because it took a long time for the agent to write everything, so I sent it off to work while I opened a second IDE to code on a different project. You could argue that having two projects going at once made me more efficient, but the reality involved a lot of mental context switching and constant checking that made the workflow feel fragmented.

The AI really excelled at handling the heavy mocking for the database and AWS services, because that code is usually a pain to write. Everything went well until the tests actually started to run and SonarQube began throwing its toys out of the pram. Claude and SonarQube ended up arguing with each other over various flags, and I had to step in because SonarQube did not understand that certain variables were necessary for the tests, even if at first glance they appeared unused.
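As a rough illustration of the kind of test double involved, here is a dependency-free sketch. It is an assumption on my part for the sake of the example, not the code the agent produced: `CustomerDao`, `InMemoryCustomerDao`, and `WelcomeService` are hypothetical names, and a hand-written in-memory fake stands in for what a mocking library would normally generate.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// Hypothetical DAO interface; the real one would talk to the database.
interface CustomerDao {
    Optional<String> findEmailById(long id);
}

// Hand-written fake: keeps rows in a map so tests never touch a real database.
final class InMemoryCustomerDao implements CustomerDao {
    private final Map<Long, String> emailsById = new HashMap<>();

    void put(long id, String email) {
        emailsById.put(id, email);
    }

    @Override
    public Optional<String> findEmailById(long id) {
        return Optional.ofNullable(emailsById.get(id));
    }
}

// Hypothetical service under test; it only knows about the interface.
final class WelcomeService {
    private final CustomerDao dao;

    WelcomeService(CustomerDao dao) {
        this.dao = dao;
    }

    String greetingFor(long id) {
        // Falls back to a generic greeting when the customer is unknown.
        return dao.findEmailById(id)
                .map(email -> "Welcome, " + email)
                .orElse("Welcome, guest");
    }
}
```

Because the service depends on the interface rather than a concrete class, the same idea extends to AWS clients: wrap the call behind a small interface and swap in a fake for the tests.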

This is where AI really excels because it takes away the drudgery of writing tests and refactoring and makes sure you have closed off the most obvious of holes.

I managed to burn through all my monthly credits in a single day because I was doing a lot of work in one session (comparing models was my Achilles heel). I used Cursor to set off a few agents in parallel and found that there was not a huge difference between the various models.

We are reaching a point where the specific model does not matter. It is a Pepsi vs. Coca-Cola world: still cola, just with a slightly different taste. This is a problem for the AI companies, who are constantly trying to hype how their model is the best, especially nowadays when so much money is being invested and they need to show a return. I am anxiously waiting for the day adverts start popping up in my coding prompts (“I see you are trying to get Postgres to perform. Should you maybe consider Oracle instead, with its excellent support?”).

The reality is the differences are becoming negligible, with no real customer lock-in or loyalty. I will happily move to the cheapest monthly model in a heartbeat, and developers are not known for spending money at the best of times.

I am very happy AI is finishing off the tasks I don’t like doing but that need to be done.

AI Disclaimer: Gemini Nano Banana Pro was used to generate the photos, based on the 1980 movie The Shining, with me as Jack Nicholson.